In today’s world of wanting to automate everything, specifically, automating your CI/CD process for your Power BI datasets and dataset refreshes, you need to understand your options when it comes to the credentials you can use for your data sources.

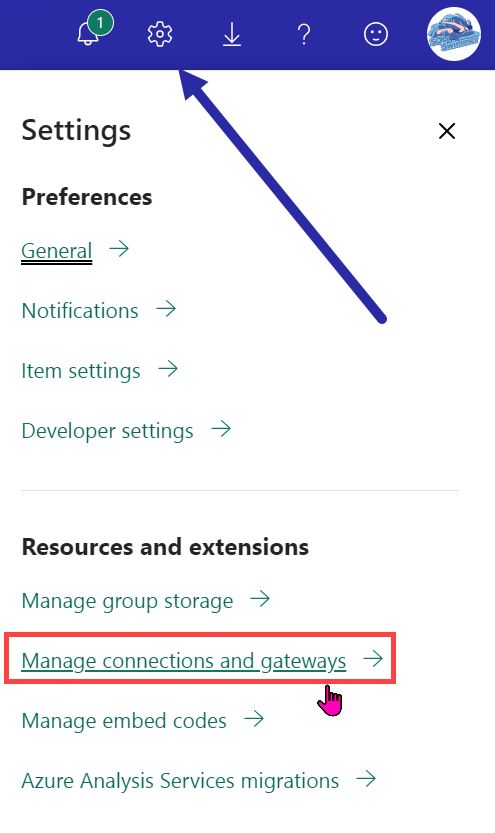

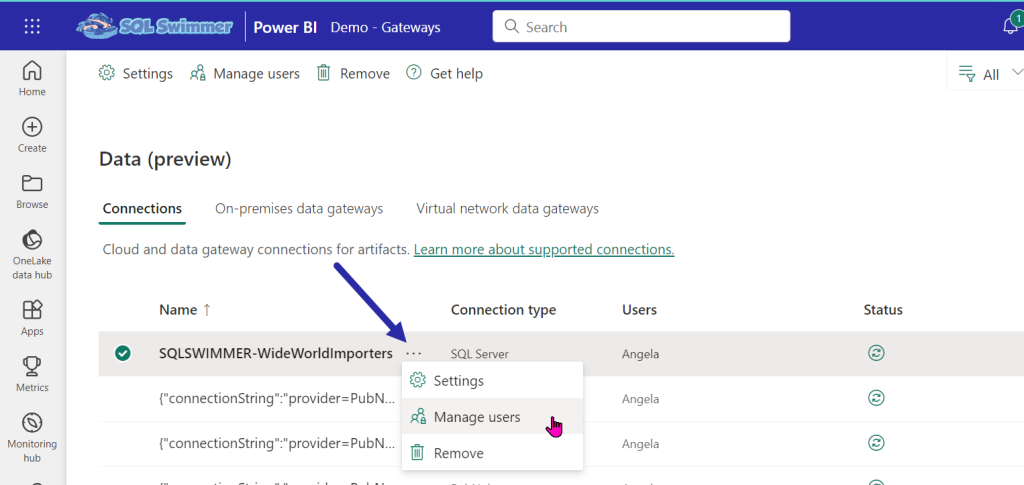

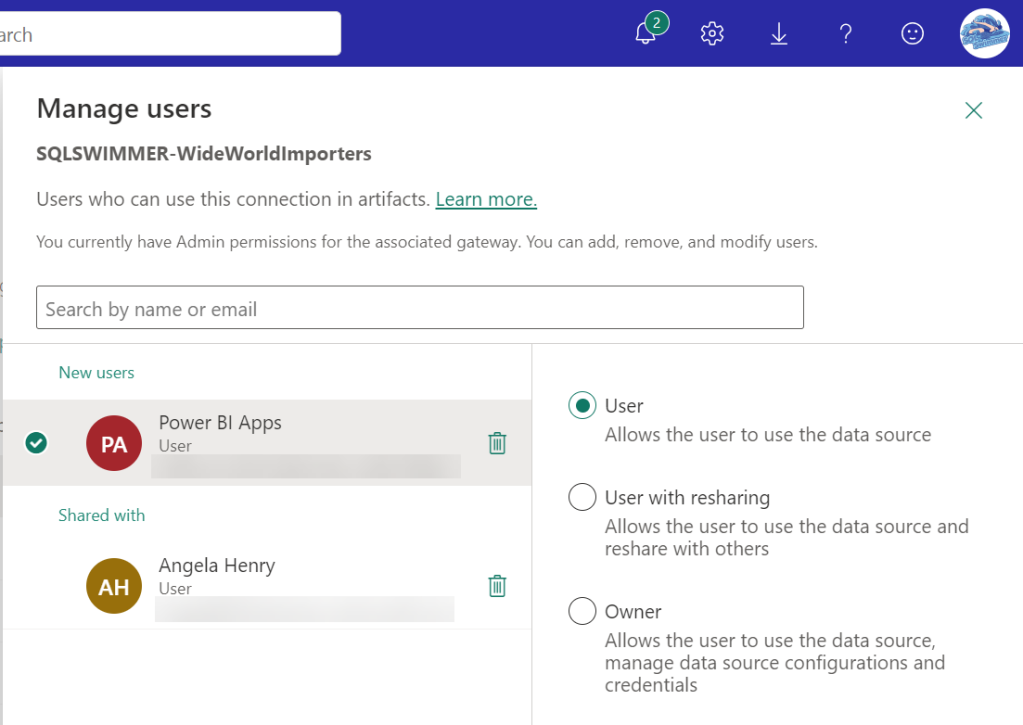

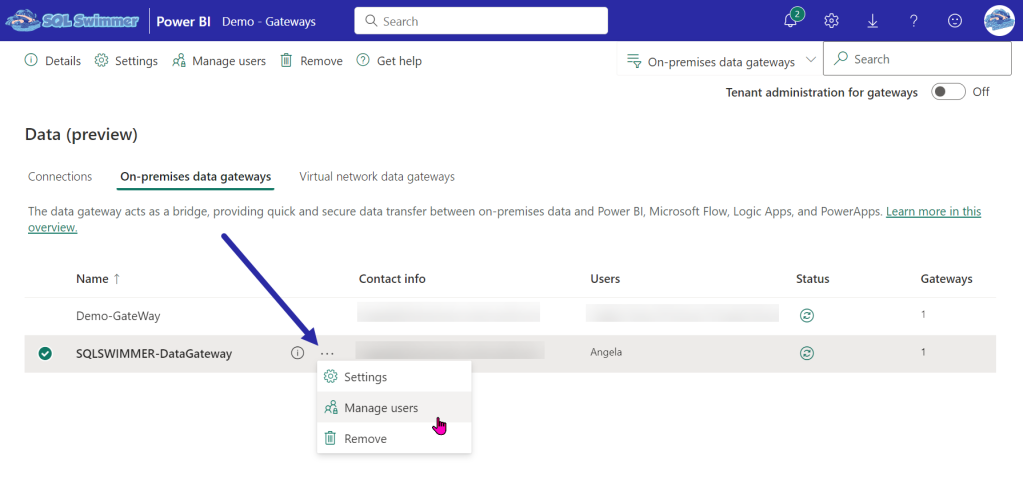

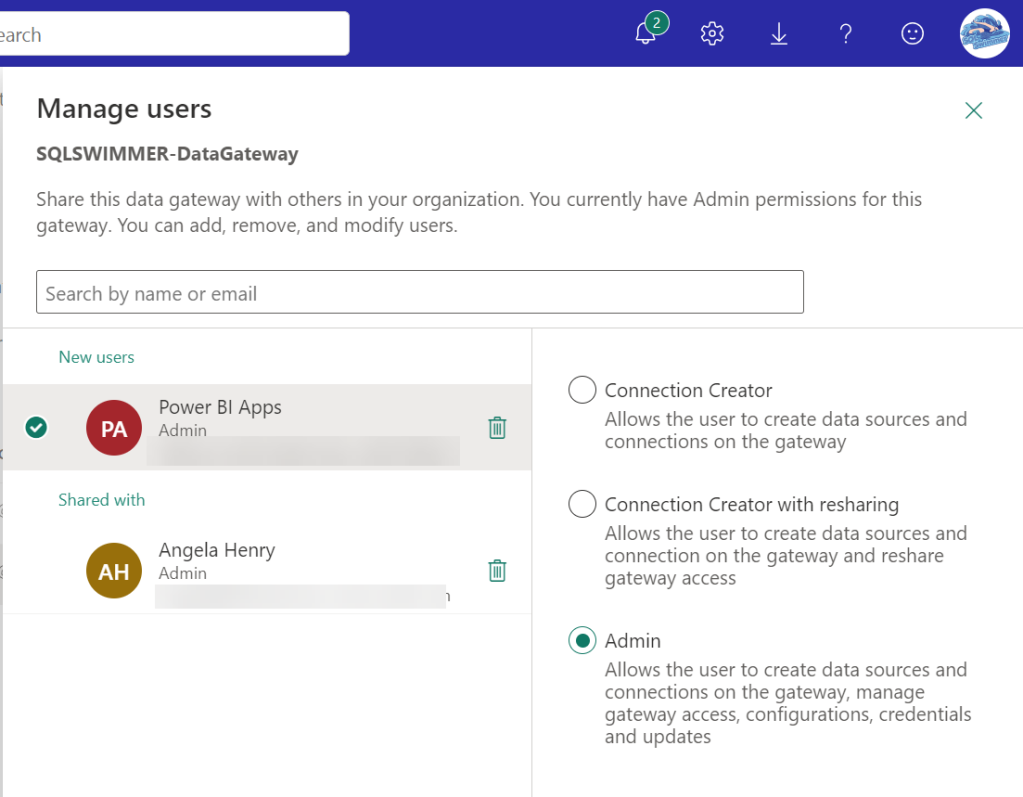

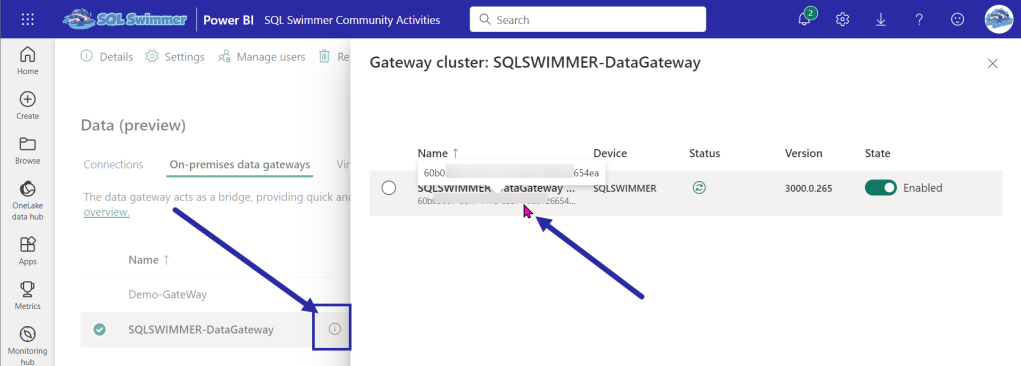

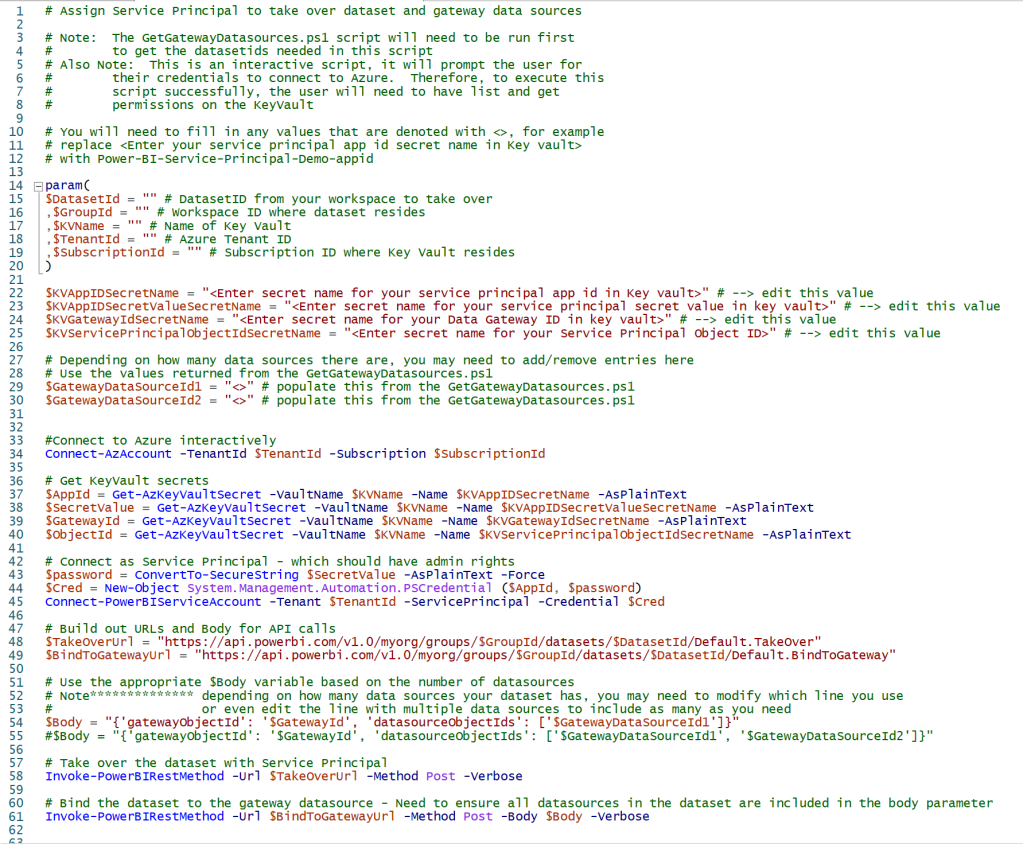

If we are using enterprise-wide datasets, we don’t want Power BI datasets owned by individuals; we want them to be owned by a Service Principal so they aren’t relying on specific individuals when things go sideways (and because we all want to go on vacation at some point). However, it’s not always clear on what credentials will actually be used for our data sources in our datasets when using a Service Principal. In a previous post, I talked about how to set up a service principal to take over a dataset when using data gateways, but one of the pre-requisites I listed was that your data sources needed to be configured with appropriate credentials. That’s where this post comes in.

You essentially have three options for data source credentials, depending on your data source type.

- Basic Authentication

- Active Directory/Azure Active Directory Authentication

- Service Principal

This post will help you understand these options and the pros/cons of each.

Basic Authentication

If you are using an on-prem data source like SQL Server or Oracle, basic authentication means you have a username and password that only exists within this data source and it’s up to the database engine to authenticate the user. In SQL Server it’s called SQL Authentication and in Oracle it’s called Local Authentication.

Pros

- Super easy to set up

- All your security is contained within the database itself

- Almost all applications can use basic authentication

Cons

- Passwords tend to get passed around like a penny in a fountain

- On the opposite end of the spectrum from above, the password is sometimes tribal knowledge and not recorded anywhere, so folks are afraid to change the password for fear of breaking something

- Maintenance can be a nightmare, it’s yet another stop on the “disable access” checklist when someone leaves a company

Active Directory/Azure Active Directory

Active directory (on-prem based) or Azure active directory (cloud based) is sometimes referred to as Windows Authentication, because this type of credential is needed to log into a machine, whether it be a laptop, desktop, server, or environment like a network, and it exists outside of the database.

Pros

- Usually a bit more secure, since accounts are usually associated with an actual person, so passwords aren’t passed around

- Usually requires interactivity (see next Pro)

- A “Service Account” can be created that is not associated with an actual person

- Can be added to Active directory/Azure active directory security groups

Cons

- Usually requires interactivity

- Not supported by all applications, but it is supported in Power BI

Service Principal

This is by far the most secure authentication method. Service Principals are created as “app registrations” in Azure Active Directory, and by nature they are not interactive.

Pros

- Most secure out of all methods listed

- Require “tokens” to access applications

- Allow you to go on vacation

Cons

- Can be difficult to setup/configure

- In most applications, Power BI included, the tokens have a very small window when they are valid (like, just an hour), which is excellent from a security perspective, but bad from an automation perspective

Summary

Which would I use? Well, it depends. What are my client’s security requirements? Is Basic Authentication even an option? Some organizations have this disabled for their on-prem systems. If I go with Active Directory/Azure Active Directory, I would most likely use a “service account”, (where the password is stored in Key Vault) then I would use a PowerShell script to assign the credentials to the data source. Lastly there’s the Service Principal. My use of this would depend on how/when I am refreshing the dataset. If it’s at the end of an ETL/ELT process that can call PowerShell scripts and I know the dataset refresh time is less than an hour, then I would definitely use this authentication method with an additional call to get a fresh token just prior to issuing a dataset refresh. It can be difficult to choose which authentication method is best for you, but hopefully this post has helped at least a little bit.